Investigating Depth Properties of Deep Equilibrium Models

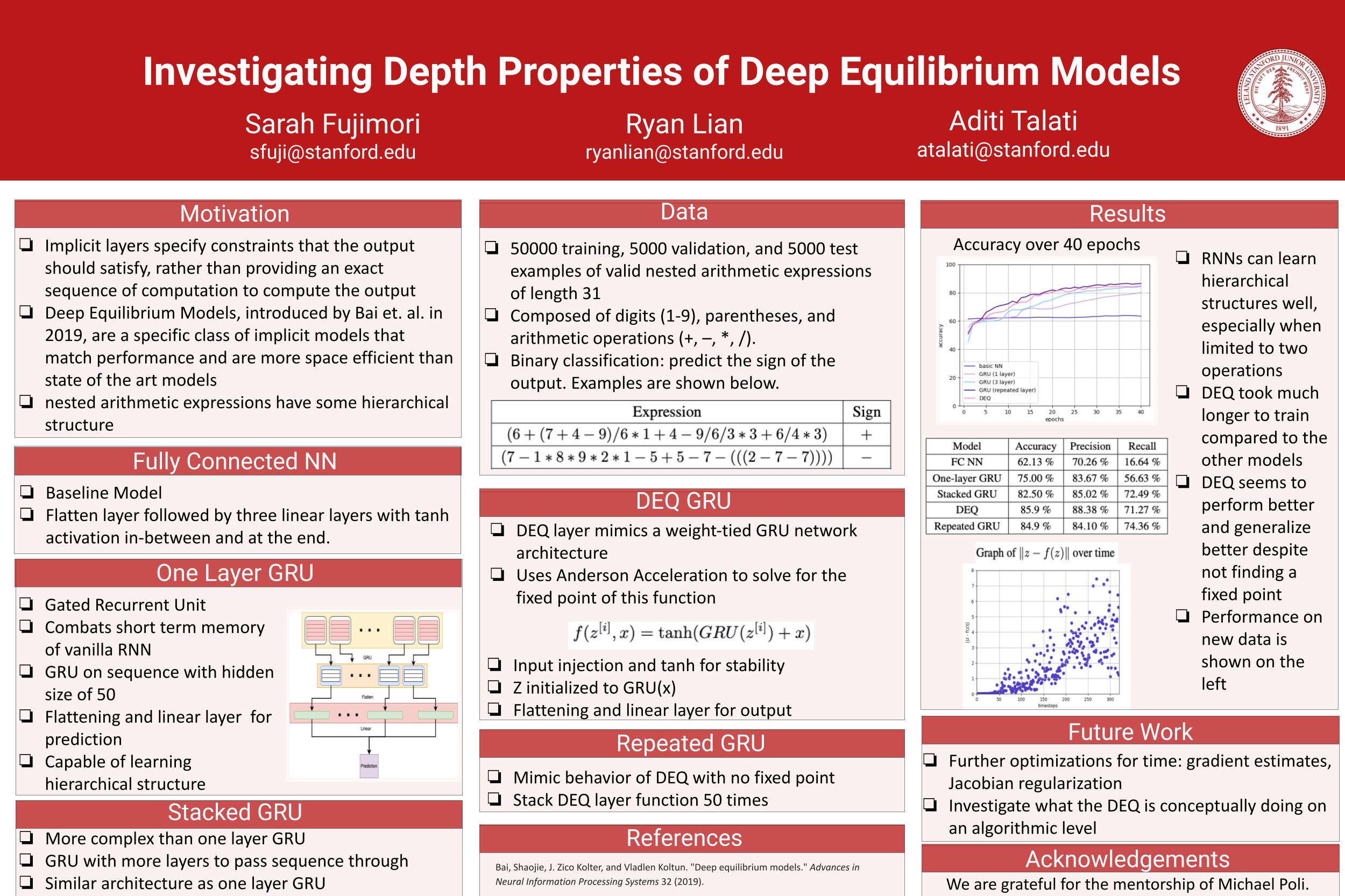

Researching a novel class of implicit neural models

Overview

I worked with two teammates to investigate the depth properties of Deep Equilibrium Models (DEQs), a new class of implicit deep learning (DL) sequential models that mimic "infinite"-layer neural networks. I had zero prior ML experience and completed this initial research project in only 7 weeks, including the literature review and learning PyTorch, Google Cloud Compute, Git, and more. I designed a task, classifying symbolic arithmetic expressions, that allowed us to test the depth properties of DEQs. Our project was awarded "Best Project" in the hallmark graduate-level course (my team was all freshmen), demonstrating my proficiency in DL sequential models and my ability to quickly pick up and creatively apply dense technical concepts and tools.

Context

Unlike traditional neural networks, which stack many layers of weights and nonlinearities, DEQs model the solution of a fixed-point equation that is a continuous analogue of residual networks. In other words, a DEQ models a network where a single “layer” is applied repeatedly until its output converges, which makes it behave like an “infinite”-layer network. These models have the potential to outperform traditional networks on tasks that require a deeper architecture, but most recent work has focused on larger-scale applications. Because of our compute limitations, we had to get creative and design a smaller-scale experiment that still highlighted DEQs' advantages and would allow us to explore further.
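To make the fixed-point idea concrete, here is a minimal sketch of "applying one layer until convergence" on a single scalar. It is only an illustration, not the model from our project: the `layer` function, the weight `w`, and the tolerance are all hypothetical choices, and real DEQs typically operate on high-dimensional states and use faster root-finding solvers rather than this naive iteration.

```python
import math


def layer(z, x, w=0.5):
    """One hypothetical weight-tied 'layer': z -> tanh(w * z + x).

    With |w| < 1 this map is a contraction in z, so repeated
    application converges to a unique fixed point.
    """
    return math.tanh(w * z + x)


def deq_forward(x, w=0.5, tol=1e-10, max_iter=1000):
    """Apply the same layer repeatedly until the state stops changing."""
    z = 0.0  # initial hidden state
    for i in range(1, max_iter + 1):
        z_next = layer(z, x, w)
        if abs(z_next - z) < tol:
            return z_next, i  # converged: z_next is (approximately) the fixed point
        z = z_next
    return z, max_iter  # did not converge within max_iter steps


z_star, n_iters = deq_forward(x=1.0)

# At the fixed point, applying the layer once more changes (almost) nothing.
residual = abs(layer(z_star, 1.0) - z_star)
print(f"z* = {z_star:.6f} after {n_iters} iterations, residual = {residual:.2e}")
```

The returned `z_star` satisfies `z_star ≈ layer(z_star, x)`, which is the equilibrium an "infinitely deep" stack of this same layer would settle into.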

How can we investigate the infinite-depth property of DEQs on a smaller scale?

Coming into this project, I had never really touched machine learning, sequential modeling, PyTorch, Git, Google Cloud Compute (or even shell commands), Scikit-learn, and more. The class also did not teach us deep learning or even RNNs, so I had to research and learn EVERYTHING along the way. I also did not have an extensive CS background.

Everything was so uncertain for a long time and my friends all told me this was a mistake, but I just loved the content so much I clung on to finish this project!

Outcome

(Read the paper on the cover for a detailed explanation)

Custom Task